The unique opportunity in conversational AI is that people are telling you exactly what they want, in their own words, all of the time.

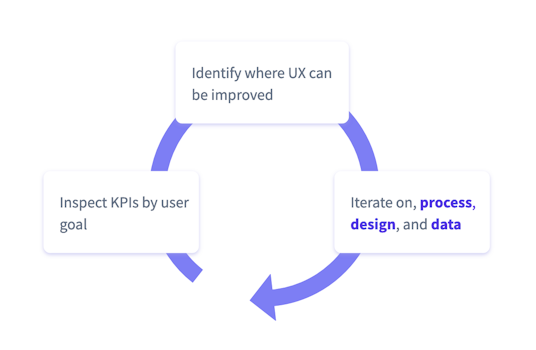

Our aim is to tap into that stream of insight and create a flywheel: a virtuous circle where AI gets better at interpreting people’s needs, we learn more about our users, and our AI helps more people do more things.

To get the flywheel rolling, a conversational team needs to know which KPIs they are accountable for, and they need a feedback loop to tell them how their efforts are impacting those KPIs. This third post in the 4-part series on CDD covers best practices for iterating and ensuring you are executing on opportunities to improve your bot.

Choosing KPIs

What KPIs will we track to determine this product’s success? You should have a provisional answer by the end of your discovery process, but do allow yourself some flexibility. The true usefulness of a metric reveals itself over time, and you might not be able to measure some of your KPIs until certain milestones have been met and the necessary infrastructure is in place. What matters most is establishing a habit of systematically tracking KPIs and evaluating your efforts to move the needle on them. Worry less about choosing the perfect metric right away, and instead pick 5-10 to track and focus on building good habits.

The business value of your AI assistant is likely to be derived from a lagging metric. Lagging indicators are ones which can only be measured by deploying your bot to real users. Some common KPIs are:

The first two can be measured automatically, while #3 and #4 require explicit feedback from users. These two types of metrics are complementary, and it’s good to have one of each, especially since only a single-digit percentage of your users will typically respond to your feedback questions.

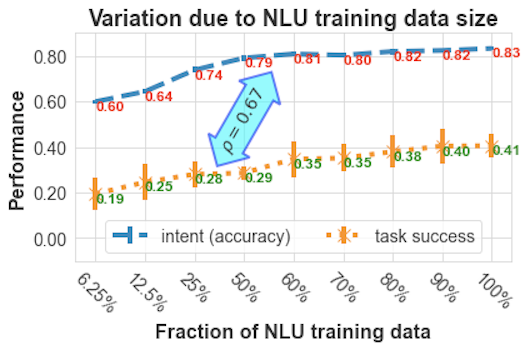

Leading indicators are proxies for the quality of your assistant which can also be evaluated “offline”, i.e. you can measure these on a new version of your assistant before pushing it live. The section on NLU evaluation metrics below provides some examples. While not a replacement, leading indicators are a helpful proxy to provide feedback during the development cycle. The chart below, taken from another blog post, illustrates the correlation between NLU performance and task success for one bot.

Connecting the Dots between Releases and Improvements

Anyone on your team should be able to say “in our latest release we worked on improving X and the result was Y”. It’s vital that the team is empowered with this feedback loop - without it, the best they can do is guess.

In any given sprint, you’ll be focused on improving 1-2 areas of your bot. To see the impact your efforts have, you need to be able to view a drilldown of your KPIs, broken down by the different user goals your bot supports.

At the end of a sprint, you will have improved the bot in certain ways, and anyone on your team should be able to say what the expected result will be. This is where drilldowns are especially important - an incremental change can disappear when aggregated with all other user goals.
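To make the point about aggregation concrete, here is a minimal sketch of a KPI drilldown. The record layout, the user goal names, and all numbers are invented for illustration; the KPI is assumed to be a simple task completion rate.

```python
from collections import defaultdict


def make_records(release, goal, total, completed):
    """Build hypothetical records of the form (release, user_goal, task_completed)."""
    return [(release, goal, i < completed) for i in range(total)]


# Invented data: the sprint between v1 and v2 focused on reset_password,
# a low-volume user goal.
conversations = (
    make_records("v1", "check_balance", 95, 76)
    + make_records("v1", "reset_password", 5, 1)
    + make_records("v2", "check_balance", 95, 76)
    + make_records("v2", "reset_password", 5, 3)
)


def completion_rate(records):
    """Share of conversations in which the task was completed."""
    return sum(done for _, _, done in records) / len(records)


def by_release(records):
    """Aggregate KPI per release, across all user goals."""
    groups = defaultdict(list)
    for record in records:
        groups[record[0]].append(record)
    return {release: completion_rate(recs) for release, recs in groups.items()}


def by_release_and_goal(records):
    """The same KPI, broken down by (release, user_goal)."""
    groups = defaultdict(list)
    for record in records:
        groups[(record[0], record[1])].append(record)
    return {key: completion_rate(recs) for key, recs in groups.items()}


overall = by_release(conversations)
drilldown = by_release_and_goal(conversations)
print(overall)
print(drilldown)
```

With these invented numbers the aggregate completion rate barely moves (0.77 to 0.79), while the drilldown shows reset_password going from 0.2 to 0.6 and check_balance staying at 0.8.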

The specific tools used to track release notes, plan sprints, and prioritize work will vary from team to team. Whatever you use, it is the responsibility of the product owner to establish a process similar to the one illustrated below - especially the final phase of looking back and determining if the efforts from the previous sprint had the intended effect.

The Double Backlog

Whether we make it explicit or not, every AI assistant defines a model of the world: what users can say, what topics exist, what functionality is in scope and what is out. In the final post in this series, we will discuss world models in more detail. One component we’ve already covered is the intent taxonomy.

You should maintain two separate backlogs for your AI assistant. One backlog is dedicated to iterative changes, and another to the disruptive changes that require a modification of your world model. For example, adding a few new training examples to improve the performance of one of your intents is a small, iterative change. If instead you recognize that you need to split an intent in two, that’s a disruptive change that requires a change to your world model, and therefore also requires communication across the team. The table below lists examples of both kinds of changes.

Whether performing conversation analysis or reviewing the results of an NLU evaluation, you’ll notice opportunities to improve your assistant. Some of these will be iterative, some disruptive. The process for executing on iterative changes can be extremely lightweight, so separating these out can help you maintain high velocity. Disruptive changes, by nature, require more effort, and ought to be prioritized with some product management rigor. This is where it pays off to be very deliberate.

Quality Assurance and Evaluation

Quality Assurance (QA) is about verifying that the solution we’ve developed works as intended. Evaluation is about determining how effective our solution is in the real world. In AI, we can do QA by verifying that certain known inputs will generate the expected results, whereas, for evaluation, we need to estimate how well our model will generalize to unknown inputs. We’ll cover evaluation here and leave the topic of QA for the final post in this series.

Iterating on your data: NLU model performance

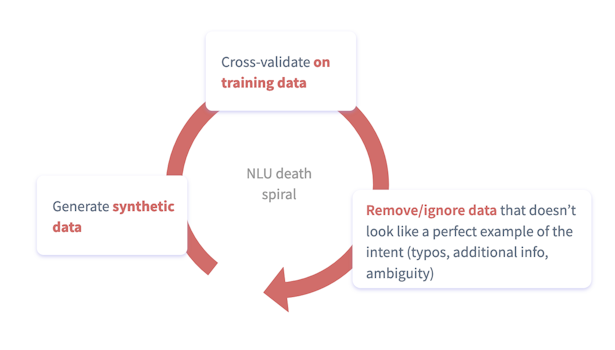

The single most important thing about iterating on your NLU model is ensuring that your evaluation is grounded in reality. I’ve worked with teams whose bots were handling almost a million conversations per month and who thought their model accuracy was in the mid-eighties, when the real number was 41%. This is what happens when you test on synthetic data. You have to evaluate your NLU model against production data - this is not negotiable.

Building up a ground truth database

We evaluate the quality of our NLU model based on how often it makes the correct prediction when a user sends a message.

To measure that, we need a database of messages that users have sent to our bot along with the ground truth, i.e. what the correct answer is. The ground truth is sometimes referred to as a “gold label”. Those gold labels come from humans who understand the end user as well as the intent taxonomy. Adding these gold labels is called annotation.

Shortly, we will describe a process for maximizing the efficiency of your annotation work that’s suitable for mature projects with multiple annotators. But if you are just starting out, it’s better not to overthink it. Take a sample of production messages and just go through and annotate them. A sample of 50 production messages is already a better way to evaluate than relying on your training data.

Once you’ve annotated a batch of messages, you can organize these by intent to get a breakdown of performance. The table below has some of the most common metrics to track. This evaluation, while manual, is the most direct and unassailable way to assess the performance of your model. It also continuously adds to your ground truth database - the collection of real user messages with gold labels attached. That pool of data is useful both for future model evaluations and as a source of training data for your model.
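As a rough sketch of what this per-intent breakdown could look like in code, the example below computes overall accuracy plus precision, recall, and F1 for each intent. The messages, the intent names, and the choice of these particular metrics are assumptions for illustration, not a prescribed list.

```python
from collections import Counter

# Hypothetical annotated batch: (message, gold_intent, predicted_intent).
annotated = [
    ("what's my balance", "check_balance", "check_balance"),
    ("how much money do I have", "check_balance", "check_balance"),
    ("i forgot my password", "reset_password", "reset_password"),
    ("can't log in", "reset_password", "check_balance"),
    ("send 20 euros to anna", "transfer_money", "transfer_money"),
    ("move money to savings", "transfer_money", "check_balance"),
]


def accuracy_of(records):
    """How often the prediction matches the gold label."""
    return sum(gold == predicted for _, gold, predicted in records) / len(records)


def per_intent_metrics(records):
    """Precision, recall, F1 and support (number of gold examples) per intent."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for _, gold, predicted in records:
        if gold == predicted:
            tp[gold] += 1
        else:
            fp[predicted] += 1  # predicted this intent, but it was wrong
            fn[gold] += 1       # missed the intent the user actually meant
    metrics = {}
    for intent in sorted(set(tp) | set(fp) | set(fn)):
        predicted_count = tp[intent] + fp[intent]
        gold_count = tp[intent] + fn[intent]
        precision = tp[intent] / predicted_count if predicted_count else 0.0
        recall = tp[intent] / gold_count if gold_count else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics[intent] = {"precision": precision, "recall": recall,
                           "f1": f1, "support": gold_count}
    return metrics


accuracy = accuracy_of(annotated)
metrics = per_intent_metrics(annotated)
print(f"accuracy: {accuracy:.2f}")
for intent, values in metrics.items():
    print(intent, values)
```

In this toy batch, check_balance has perfect recall but only 0.5 precision, because it absorbs the misclassified messages from the other two intents. A breakdown like this points to where new training examples or an intent refactor might help.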

NLU Evaluation Metrics

Performance for each intent:

Performance for each entity:

Advanced Annotation Processes

As you scale up the feedback loop of deploying a new model, annotating messages, and augmenting your training data, it becomes important to maximize the value you’re getting from the time you invest in annotating.

Annotation starts with a batch of user messages, along with the model’s predictions, from a specific time window (e.g. the previous week). This batch typically gets filtered and sampled to keep the amount of data manageable.

- First, you can filter out any messages with an exact match in your ground truth database. You already have a gold label for these and don’t need to duplicate efforts.

- Then, filter for specific (predicted) intents related to the focus of your current sprint.

- Optionally, filter by specific confidence ranges. (Note of caution: just because an ML model reports high confidence doesn’t mean it was right! This video is a good primer on confidence values.)

- Finally, randomly subsample to reduce the total number of messages.
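The filtering steps above can be sketched as a single function. The message fields (`text`, `intent`, `confidence`), the function name, and the parameter names are all hypothetical; adapt them to however your own logs are stored.

```python
import random


def select_for_annotation(batch, ground_truth_texts, focus_intents=None,
                          confidence_range=None, sample_size=100, seed=0):
    """Filter and subsample a batch of messages before annotation."""
    # 1. Drop exact matches that already have a gold label.
    candidates = [m for m in batch if m["text"] not in ground_truth_texts]
    # 2. Keep only predicted intents related to the current sprint.
    if focus_intents is not None:
        candidates = [m for m in candidates if m["intent"] in focus_intents]
    # 3. Optionally keep only a confidence range (inclusive bounds).
    if confidence_range is not None:
        low, high = confidence_range
        candidates = [m for m in candidates if low <= m["confidence"] <= high]
    # 4. Randomly subsample if there are still too many messages.
    if len(candidates) > sample_size:
        candidates = random.Random(seed).sample(candidates, sample_size)
    return candidates


# Invented example batch with the model's predictions attached.
batch = [
    {"text": "what's my balance", "intent": "check_balance", "confidence": 0.98},
    {"text": "balance pls", "intent": "check_balance", "confidence": 0.62},
    {"text": "how much do i owe", "intent": "check_balance", "confidence": 0.45},
    {"text": "reset my pin", "intent": "reset_password", "confidence": 0.91},
    {"text": "is my card blocked", "intent": "check_balance", "confidence": 0.30},
]
ground_truth_texts = {"what's my balance"}

selected = select_for_annotation(
    batch, ground_truth_texts,
    focus_intents={"check_balance"},
    confidence_range=(0.4, 0.9),
    sample_size=2,
)
print([m["text"] for m in selected])
```

A fixed seed keeps the sample reproducible, which helps when several annotators need to work from the same batch.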

There are also more advanced ways to detect where your model is likely to have made an error, for example by using the UnexpecTED intent policy.

Augmenting your training data

Every time you complete a batch of annotations, you generate a new set of training examples that you can use to teach your NLU model to understand how your users actually talk. So, which utterances from your ground truth database should become part of your training set? It’s easy to overthink this, and I have seen teams waste a lot of time debating the best heuristics for sampling data here. But you don’t have to guess! You now have two solid ways to evaluate your NLU model:

- By running your model against your ground truth database and counting how often its predictions are correct

- By completing your next batch of annotations (post-deployment) and counting how often its predictions are correct

Any intuition you have about what should and shouldn’t go into your training data can be tested quickly and objectively.

Iterating on your Design & Process

I recently met with an enterprise team whose bot was underperforming. At the beginning of our working session, I asked them: “Give me some insights into your current user experience. What user goals are doing well, and which aren’t? Where are the biggest issues in your UX?” They responded by showing me their designs and the business logic behind their bot. I tried again: “No, what I want to see is how users are actually interacting with your bot. Where are they struggling?” Again, I was shown implementation details.

Eventually, I got through to them, and we started looking at some real end-user conversations together. To understand how your bot is performing, you have to get out of your bubble and look at the conversations that are actually happening, not at the ones you designed.

Doing this well requires both quantitative and qualitative analysis. The point of this post is to arm you with the tools you need to move the needle on your KPIs, but you won’t get there by only looking at numbers.

Unlike a web or mobile app, in conversational AI we get to see a transcript that gives us unique insight into what the user was trying to do and where they got stuck. A transcript is never the full picture: we can only guess at the broader context of the user, such as where they are and what else they’ve been doing. Even so, we already get much richer information than we ever would from analyzing a sequence of button clicks.

Humans are incredible pattern matchers and empathy machines, and those skills are indispensable for making more pleasant AI assistants.

Conversation Analysis

For all the reasons above, it’s important that everyone on your team regularly spends time actually looking at some conversations from end users. As with annotation, start by forming a habit, then refine your process. The product owner is responsible for establishing this habit across the team - whether it's done individually or as a group.

While reviewing conversations, you can expect to find many of the same kinds of things as you did while prototyping. Prompts may need to be rewritten, intents may need to be refactored, and sometimes you will need to simplify the underlying business logic. When you find an opportunity to improve the UX, the obvious question is: "how often does this happen?". Adding tags to conversations is one way to keep track of patterns. It allows a product owner to estimate the impact of an issue, and makes it easy for a UX lead to discover relevant conversations when redesigning a particular flow.
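One lightweight way to answer "how often does this happen?" is to count tags across the conversations you have reviewed. The sketch below assumes a hypothetical review log that maps conversation IDs to tag sets; the tag names are invented.

```python
from collections import Counter

# Hypothetical review log: conversation id -> tags added by reviewers.
reviewed = {
    "conv-001": {"confusing_prompt"},
    "conv-002": set(),
    "conv-003": {"confusing_prompt", "needs_handoff"},
    "conv-004": {"out_of_scope"},
    "conv-005": set(),
}


def tag_share(reviewed_conversations):
    """Fraction of reviewed conversations carrying each tag."""
    counts = Counter(
        tag for tags in reviewed_conversations.values() for tag in tags
    )
    total = len(reviewed_conversations)
    return {tag: count / total for tag, count in counts.items()}


def conversations_with(reviewed_conversations, tag):
    """IDs of conversations with a given tag, e.g. for a UX lead to revisit."""
    return sorted(
        cid for cid, tags in reviewed_conversations.items() if tag in tags
    )


shares = tag_share(reviewed)
print(shares)
print(conversations_with(reviewed, "confusing_prompt"))
```

The shares are only an estimate of impact, since they depend on which conversations were sampled for review.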

As you scale out your practice of conversation analysis, many of the same filtering heuristics from the section on NLU annotation can be applied. In addition, you can target your efforts by reviewing conversations where specific events did or did not take place. For example, you may want to look at conversations where users expressed a particular intent, and at the end of the conversation gave negative feedback.
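The event-based filter described above might look like the following sketch. The event schema (`type`, `intent`, `value`) is an assumption made for this example and does not correspond to any particular tool's export format.

```python
# Hypothetical conversation logs with a simplified event schema.
conversations = [
    {"id": "conv-101", "events": [
        {"type": "user", "intent": "reset_password"},
        {"type": "bot", "text": "I can help with that."},
        {"type": "feedback", "value": "negative"},
    ]},
    {"id": "conv-102", "events": [
        {"type": "user", "intent": "reset_password"},
        {"type": "bot", "text": "I can help with that."},
        {"type": "feedback", "value": "positive"},
    ]},
    {"id": "conv-103", "events": [
        {"type": "user", "intent": "check_balance"},
        {"type": "feedback", "value": "negative"},
    ]},
    {"id": "conv-104", "events": [
        {"type": "user", "intent": "reset_password"},
        {"type": "bot", "text": "I can help with that."},
    ]},
]


def expressed_intent(conversation, intent):
    """True if any user message in the conversation was assigned this intent."""
    return any(
        event["type"] == "user" and event.get("intent") == intent
        for event in conversation["events"]
    )


def ended_with_negative_feedback(conversation):
    """True if the final event of the conversation is negative feedback."""
    events = conversation["events"]
    if not events:
        return False
    last = events[-1]
    return last["type"] == "feedback" and last.get("value") == "negative"


to_review = [
    c["id"] for c in conversations
    if expressed_intent(c, "reset_password") and ended_with_negative_feedback(c)
]
print(to_review)
```

Conversations without any feedback (like the last one here) are excluded by this filter. Since most users do not answer feedback questions, it is worth reviewing some of those separately.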

Conclusion

We've covered a lot of ground in this post: how to choose KPIs, how to establish a systematic way to improve them, and how to identify and execute on opportunities to improve your bot.

In the final post in this series, I'll talk about how to scale this out to more users, markets, languages, and teams.